The Indian Startup Making AI Fairer While Helping the Poor

In the shade of a coconut palm, Chandrika tilts her smartphone screen to avoid the sun’s glare. It is early morning in Alahalli village in the southern Indian state of Karnataka, but the heat and humidity are rising fast. As Chandrika scrolls, she clicks on several audio clips in succession, demonstrating the simplicity of the app she recently started using. At each tap, the sound of her voice speaking her mother tongue emerges from the phone.

Before she started using this app, 30-year-old Chandrika (who, like many South Indians, uses the first letter of her father’s name, K., instead of a last name) had just 184 rupees ($2.25) in her bank account. But in return for around six hours of work spread over several days in late April, she received 2,570 rupees ($31.30). That’s roughly the same amount she makes in a month of working as a teacher at a distant school, after the cost of the three buses it takes her to get there and back. Unlike her day job, the app doesn’t make her wait until the end of the month for payment; money lands in her bank account in just a few hours. Just by reading text aloud in her native language of Kannada, spoken by around 60 million people mostly in central and southern India, Chandrika has used this app to earn an hourly wage of about $5, nearly 20 times the Indian minimum. And in a few days, more money will arrive: a 50% bonus, awarded once the voice clips are validated as accurate.


Chandrika’s voice can fetch this sum because of the boom in artificial intelligence (AI). Right now, cutting-edge AIs (for example, large language models like ChatGPT) work best in languages like English, where text and audio data is abundant online. They work much less well in languages like Kannada which, even though it is spoken by millions of people, is scarce on the internet. (Wikipedia has 6 million articles in English, for example, but only 30,000 in Kannada.) When they function at all, AIs in these “lower resourced” languages can be biased, for example by regularly assuming that doctors are men and nurses women, and can struggle to understand local dialects. To create an effective English-speaking AI, it is enough to simply collect data from where it has already accumulated. But for languages like Kannada, you need to go out and find more.

Photograph by Supranav Dash for TIME

This has created huge demand for datasets (collections of text or voice data) in languages spoken by some of the poorest people in the world. Part of that demand comes from tech companies seeking to build out their AI tools. Another big chunk comes from academia and governments, especially in India, where English and Hindi have long held outsize precedence in a nation of some 1.4 billion people with 22 official languages and at least 780 more indigenous ones. This rising demand means that hundreds of millions of Indians are suddenly in control of a scarce and newly valuable asset: their mother tongue.

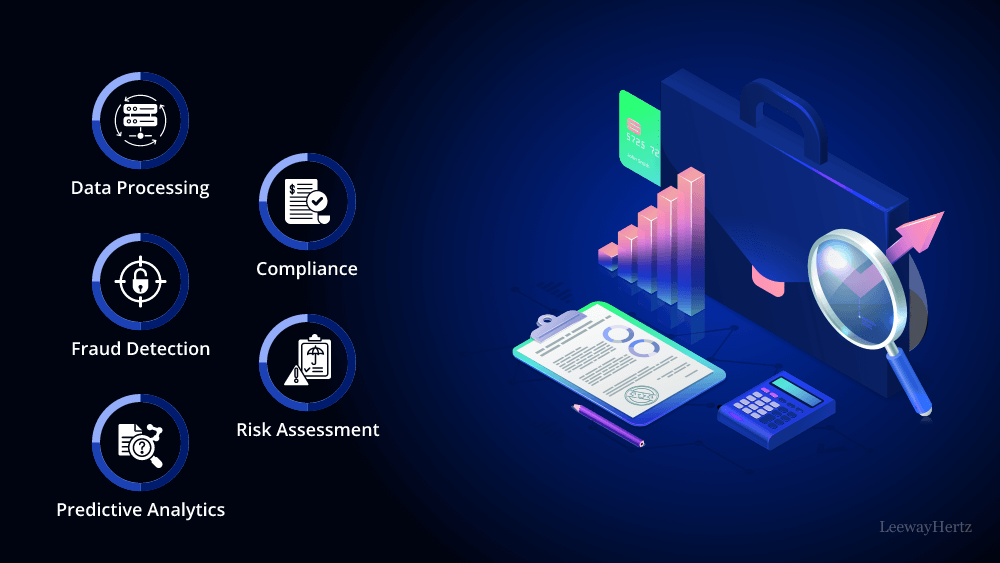

Data work (creating or refining the raw material at the heart of AI) is not new in India. The economy that did so much to turn call centers and garment factories into engines of productivity at the end of the 20th century has quietly been doing the same with data work in the 21st. And, like its predecessors, the industry is once again dominated by labor arbitrage companies, which pay wages close to the legal minimum even as they sell data to foreign clients for a hefty mark-up. The AI data sector, worth over $2 billion globally in 2022, is projected to rise in value to $17 billion by 2030. Little of that money has flowed down to data workers in India, Kenya, and the Philippines.

These conditions may cause harms far beyond the lives of individual workers. “We’re talking about systems that are impacting our whole society, and workers who make those systems more reliable and less biased,” says Jonas Valente, an expert in digital work platforms at Oxford University’s Internet Institute. “If you have workers with basic rights who are more empowered, I believe that the outcome, the technological system, will have a better quality as well.”

In the neighboring villages of Alahalli and Chilukavadi, one Indian startup is testing a new model. Chandrika works for Karya, a nonprofit launched in 2021 in Bengaluru (formerly Bangalore) that bills itself as “the world’s first ethical data company.” Like its competitors, it sells data to big tech companies and other clients at the market rate. But instead of keeping much of that cash as profit, it covers its costs and funnels the rest toward the rural poor in India. (Karya partners with local NGOs to ensure access to its jobs goes first to the poorest of the poor, as well as historically marginalized communities.) In addition to its $5 hourly minimum, Karya gives workers de-facto ownership of the data they create on the job, so whenever it is resold, the workers receive the proceeds on top of their past wages. It’s a model that doesn’t exist anywhere else in the industry.

The work Karya is doing also means that millions of people whose languages are marginalized online could stand to gain better access to the benefits of technology, including AI. “Most people in the villages don’t know English,” says Vinutha, a 23-year-old student who has used Karya to reduce her financial reliance on her parents. “If a computer could understand Kannada, that would be very helpful.”

“The wages that exist right now are a failure of the market,” Manu Chopra, the 27-year-old CEO of Karya, tells me. “We decided to be a nonprofit because fundamentally, you can’t solve a market failure in the market.”


The catch, if you can call it that, is that the work is supplementary. The first thing Karya tells its workers is: This is not a permanent job, but rather a way to quickly get an income boost that will allow you to go on and do other things. The maximum a worker can earn through the app is the equivalent of $1,500, roughly the average annual income in India. After that point, they make way for somebody else. Karya says it has paid out 65 million rupees (nearly $800,000) in wages to some 30,000 rural Indians up and down the country. By 2030, Chopra wants it to reach 100 million people. “I genuinely feel this is the quickest way to move millions of people out of poverty if done right,” says Chopra, who was born into poverty and won a scholarship to Stanford that changed his trajectory. “This is absolutely a social mission. Wealth is power. And we want to redistribute wealth to the communities who have been left behind.”

Chopra isn’t the first tech founder to rhapsodize about the potential of AI data work to benefit the world’s poorest. Sama, an outsourcing company that has handled contracts for OpenAI’s ChatGPT and Meta’s Facebook, also marketed itself as an “ethical” way for tech companies to lift people in the Global South out of poverty. But as I reported in January, many of its ChatGPT workers in Kenya, some earning less than $2 per hour, told me they were exposed to training data that left them traumatized. The company also performed similar content moderation work for Facebook; one worker on that project told me he was fired when he campaigned for better working conditions. When asked by the BBC about low wages in 2018, Sama’s late founder argued that paying workers higher wages could disrupt local economies, causing more harm than good. Many of the data workers I’ve spoken to while reporting on this industry for the past 18 months have bristled at this logic, saying it’s a convenient narrative for companies that are getting rich off the proceeds of their labor.


Sanjana, 18, left school to care for her sick father. Her Karya work helps support her family. Supranav Dash for TIME

There is another way, Chopra argues. “The biggest lesson I have learned over the last 5 years is that all of this is possible,” he wrote in a series of tweets in response to my January article on ChatGPT. “This is not some dream for a fictional better world. We can pay our workers 20 times the minimum wage, and still be a sustainable organization.”

It was the first I’d heard of Karya, and my immediate instinct was skepticism. Sama too had begun its life as a nonprofit focused on poverty eradication, only to transition later to a for-profit business. Could Karya really be a model for a more inclusive and ethical AI industry? Even if it were, could it scale? One thing was clear: there could be few better testing grounds for these questions than India, a country where mobile data is among the cheapest in the world, and where it is common for even poor rural villagers to have access to both a smartphone and a bank account. Then there is the potential upside: even before the pandemic some 140 million people in India survived on under $2.15 per day, according to the World Bank. For these people, cash injections of the magnitude Chopra was talking about could be life-changing.

Just 70 miles from the bustling tech metropolis of Bengaluru, past sugarcane fields and under the bright orange arcs of blossoming gulmohar trees, is the village of Chilukavadi. Inside a low concrete building, the headquarters of a local farming cooperative, a dozen men and women are gathered, all of whom have started working for Karya within the past week.

Kanakaraj S., a skinny 21-year-old, sits cross-legged on the cool concrete floor. He is studying at a nearby college, and to pay for books and transport costs he often works as a casual laborer in the surrounding fields. A day’s work can earn him 350 rupees (around $4), but this kind of manual labor is becoming more unbearable as climate change makes summers here even more sweltering than usual. Working in a factory in a nearby city would mean a slightly higher wage, but also hours of daily commuting on unreliable and expensive buses or, worse, moving away from his support network to live in dormitory accommodation in the city.

At Karya, Kanakaraj can earn more in an hour than he makes in a day in the fields. “The work is good,” he says. “And easy.” Chopra says that’s a typical refrain when he meets villagers. “They’re happy we pay them well,” he says, but more importantly, “it’s that it’s not hard work. It’s not physical work.” Kanakaraj was surprised when he saw the first payment land in his bank account. “We’ve lost a lot of money from scams,” he tells me, explaining that it is common for villagers to receive SMS texts preying on their desperation, offering to multiply any deposits they make by 10. When somebody first told him about Karya he assumed it was a similar con, which Chopra says is a common initial response.


With so little in savings, local people often find themselves taking out loans to cover emergency costs. Predatory agencies tend to charge high interest rates on these loans, leaving some villagers here trapped in cycles of debt. Chandrika, for example, will use some of her Karya wages to help her family pay off a large medical loan incurred when her 25-year-old sister fell ill with low blood pressure. Despite the medical treatment, her sister died, leaving the family responsible for both an infant and a mountain of debt. “We can figure out how to repay the loan,” says Chandrika, a tear rolling down her cheek. “But we can’t bring back my sister.” Other Karya workers find themselves in similar situations. Ajay Kumar, 25, is drowning in medical debt taken out to treat his mother’s severe back injury. And Shivanna N., 38, lost his right hand in a firecracker accident as a boy. While he doesn’t have debt, his disability means he struggles to make a living.

The work these villagers are doing is part of a new project that Karya is rolling out across the state of Karnataka for an Indian healthcare NGO seeking speech data about tuberculosis, a mostly curable and preventable disease that still kills around 200,000 Indians every year. The voice recordings, collected in 10 different dialects of Kannada, will help train an AI speech model to understand local people’s questions about tuberculosis, and respond with information aimed at reducing the spread of the disease. The hope is that the app, when completed, will make it easier for illiterate people to access reliable information, without shouldering the stigma that tuberculosis patients (victims of a contagious disease) often attract when they seek help in small communities. The recordings will also go up for sale on Karya’s platform as part of its Kannada dataset, on offer to the many AI companies that care less about the contents of their training data than what it encodes about the overall structure of the language. Every time it’s resold, 100% of the revenue will be distributed to the Karya workers who contributed to the dataset, apportioned by the hours they put in.

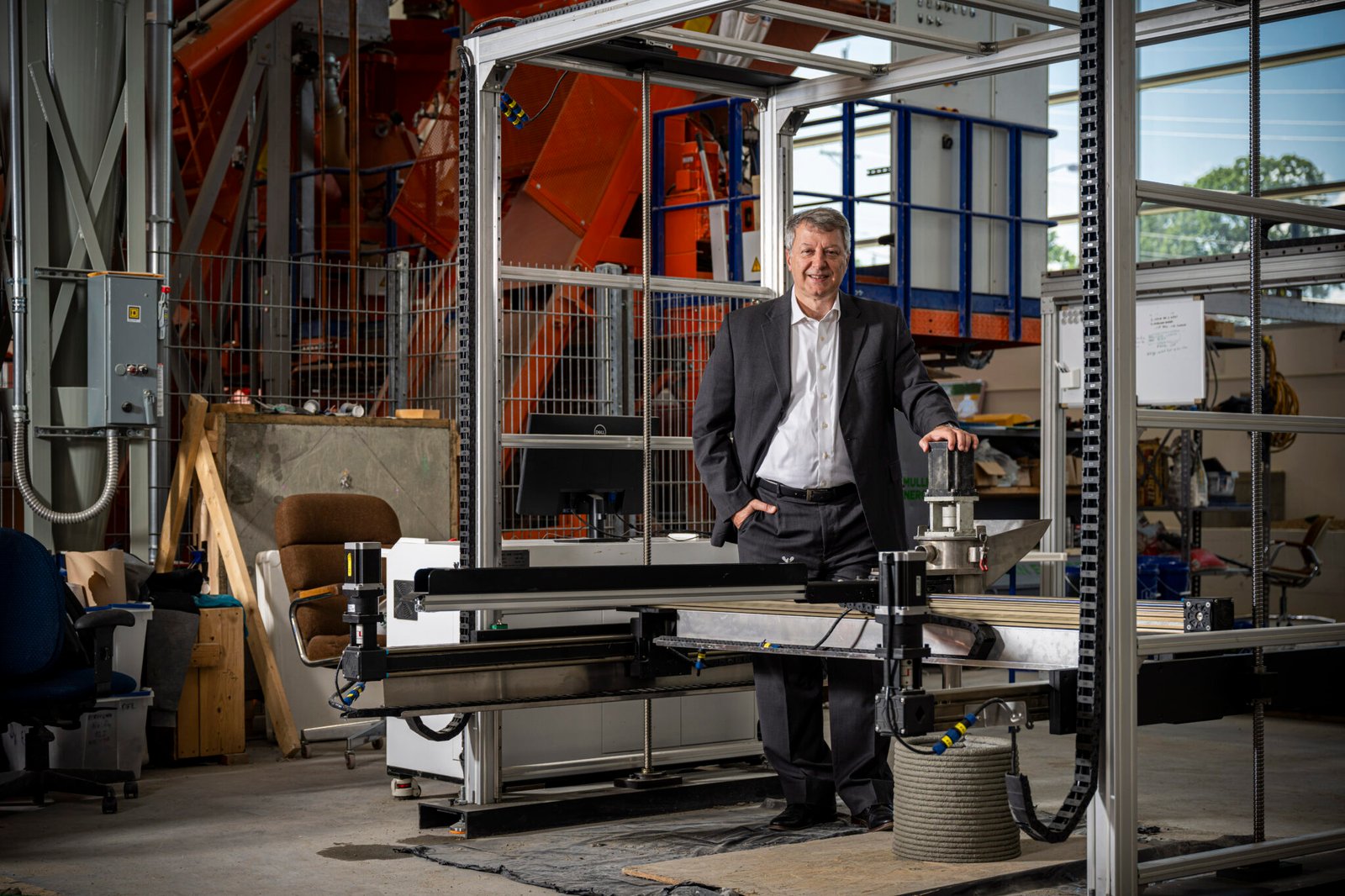

Manu Chopra, CEO of Karya. Supranav Dash for TIME

Rajamma M., a 30-year-old woman from a nearby village, previously worked as a COVID-19 surveyor for the government, going from door to door checking if people had been vaccinated. But the work dried up in January. The money from working for Karya, she says, has been welcome, but more than that, she has appreciated the opportunity to learn. “This work has given me greater awareness about tuberculosis and how people should take their medicine,” she says. “This will be helpful for my job in the future.”

Although small, Karya already has a list of high-profile clients including Microsoft, MIT, and Stanford. In February, it began work on a new project for the Bill and Melinda Gates Foundation to build voice datasets in five languages spoken by some 1 billion Indians: Marathi, Telugu, Hindi, Bengali, and Malayalam. The end goal is to build a chatbot that can answer rural Indians’ questions, in their native languages and dialects, about health care, agriculture, sanitation, banking, and career development. This technology (think of it as a ChatGPT for poverty eradication) could help share the knowledge needed to improve quality of life across vast swaths of the subcontinent.

“I think there should be a world where language is no longer a barrier to technology, so everyone can use technology irrespective of the language they speak,” says Kalika Bali, a linguist and principal researcher at Microsoft Research who is working with the Gates Foundation on the project and is an unpaid member of Karya’s oversight board. She has specifically designed the prompts workers are given to read aloud to mitigate the gender biases that often creep into datasets and thus help to avoid the “doctor” and “nurse” problem. But it’s not just about the prompts. Karya’s relatively high wages “percolate down to the quality of the data,” Bali says. “It will immediately result in better accuracy of the system’s output.” She says she typically receives data with a less than 1% error rate from Karya, “which is almost never the case with data that we build [AI] models with.”

Over the course of several days together, Chopra tells me a version of his life story that makes his path toward Karya feel simultaneously impossible and inevitable. He was born in 1996 in a basti, an informal settlement, next to a railway line in Delhi. His grandparents had arrived there as refugees from Pakistan during the partition of British India in 1947, and there the family had remained for two generations. Although his parents were well-educated, he says, they often struggled to put food on the table. He could tell when his father, who ran a small factory making train parts, had had a good day at work because dinner would be the relatively expensive Maggi instant ramen, not cheap lentil dal. Every monsoon the basti’s gutters would flood, and his family would have to move in with his grandmother nearby for a few days. “I think all of us have a keen recognition of the idea that money is a cushion from reality,” Chopra says of the Karya team. “Our goal is to give that cushion to as many people as possible.”

Chopra excelled at the basti’s local school, which was run by an NGO. When he was in ninth grade he won a scholarship to a private school in Delhi, which was running a competition to give places to kids from poor backgrounds. Though he was bullied, he acknowledges that certain privileges helped open doors for him. “As difficult as my journey was, it was significantly easier than most people’s in India,” he says, “because I was born to two educated parents in an upper-caste family in a major city.”

When Chopra was 17, a woman was fatally gang-raped on a bus in Delhi, a crime that shocked India and the world. Chopra, who was discovering a love for computer science at the time and idolized Steve Jobs, set to work. He built a wristwatch-style “anti-molestation device,” which could detect an elevated heart rate and administer a weak electric shock to an attacker, with the intent to allow the victim time to escape. The device grabbed the attention of the media and India’s former President, Dr. A.P.J. Abdul Kalam, who encouraged Chopra to apply for a scholarship at Stanford. (The only thing Chopra knew about Stanford at the time, he recalls, is that Jobs studied there. Later he discovered even that wasn’t true.) Only later did Chopra realize the naivety of trying to solve the problem of endemic sexual violence with a gadget. “Technologists are very prone to seeing a problem and building to solve it,” he says. “It’s hard to critique an 11th grade kid, but it was a very technical solution.”

From left to right: Santhosh, 22, Chandrika, 30, and Guruprasad, 23, display the Karya app on their phones. Supranav Dash for TIME

Shivanna N., 38, lost his right hand in an accident at the age of 8. His disability has made it hard to find work. Supranav Dash for TIME

As he tells it, his arrival in California was a culture shock in more ways than one. On his first night, Chopra says, each student in his dorm explained how they planned to make their first billion dollars. Somebody suggested building “Snapchat for puppies,” he recalls. Everyone there aspired to be a billionaire, he realized, except him. “Very early at Stanford, I felt alone, like I was in the wrong place,” Chopra says. Still, he had come to college as a “techno-utopian,” he says. That gradually fell away as he learned in class about how IBM had built systems to support Apartheid in South Africa, and other ways technology companies had hurt the world by chasing profit alone.

Returning to India after college, Chopra joined Microsoft Research, a subsidiary of the big tech company that gives researchers a long leash to work on difficult social problems. With his colleague Vivek Seshadri, he set out to research whether it would be possible to channel money to rural Indians using digital work. One of Chopra’s first field visits was to a center operated by an AI data company in Mumbai. The room was hot and dirty, he recalls, and full of men hunched over laptops doing image annotation work. When he asked them how much they were earning, they told him they made 30 rupees per hour, or just under $0.40. He didn’t have the heart to tell them the going rate for the data they were annotating was, conservatively, 10 times that amount. “I thought, this cannot be the only way this work can happen,” he says.

Chopra and Seshadri worked on the idea for four years at Microsoft Research, doing field studies and building a prototype app. They discovered an “overwhelming enthusiasm” for the work among India’s rural poor, according to a paper they published with four colleagues in 2019. The research confirmed Chopra and Seshadri’s suspicions that the work could be done to a high standard of accuracy even with no training, from a smartphone rather than a physical office, and without workers needing the ability to speak English, thus making it possible to reach not just city-dwellers but the poorest of the poor in rural India. In 2021 Chopra and Seshadri, with a grant from Microsoft Research, quit their jobs to spin Karya out as an independent nonprofit, joined by a third cofounder, Safiya Husain. (Microsoft holds no equity in Karya.)

Unlike many Silicon Valley rags-to-riches stories, Chopra’s trajectory, in his telling, wasn’t a result of his own hard work. “I got lucky 100 times in a row,” he says. “I am a product of irrational compassion from nonprofits, from schools, from the government, all of these places that are supposed to help everyone, but they don’t. When I have received so much compassion, the least I can do is give back.”

Not everybody is eligible to work for Karya. Chopra says that initially he and his team opened the app up to anybody, only to realize the first hundred sign-ups were all men from a dominant-caste community. The experience taught him that “knowledge flows through the channels of power,” Chopra says. To reach the poorest communities, as well as marginalized castes, genders, and religions, Chopra learned early on that he had to team up with nonprofits with a grassroots presence in rural areas. These organizations could distribute access codes on Karya’s behalf in line with income and diversity requirements. “They know for whom that money is nice to have, and for whom it is life-changing,” he says. This process also ensures more diversity in the data that workers end up producing, which can help to minimize AI bias.

Chopra defines this approach using a Hindi word, thairaav, a term from Indian classical music which he translates as a mix between “pause” and “thoughtful impact.” It’s a concept, he says, that is missing not only from the English language, but also from the business philosophies of Silicon Valley tech companies, who often put scale and speed above all else. Thairaav, to him, means that “at every step, you are pausing and thinking: Am I doing the right thing? Is this right for the community I’m trying to serve?” That kind of thoughtfulness “is just missing from a lot of entrepreneurial ‘move fast and break things’ behavior,” he says. It’s an approach that has led Karya to flatly reject four offers so far from potential clients to do content moderation that would require workers to view traumatizing material.

It’s compelling. But it’s also coming from a guy who says he wants to scale his app to reach 100 million Indians by 2030. Doesn’t Karya’s reliance on grassroots NGOs to onboard every new worker mean it faces a significant bottleneck? Actually, Chopra tells me, the limiting factor to Karya’s expansion isn’t finding new workers. There are millions who will jump at the chance to earn its high wages, and Karya has built a vetted network of more than 200 grassroots NGOs to onboard them. The bottleneck is the amount of available work. “What we need is large-scale awareness that most data companies are unethical,” he says, “and that there is an ethical way.” For the app to have the impact Chopra believes it could, he needs to win more clients: to persuade more tech companies, governments, and academic institutions to get their AI training data from Karya.

Madhurashree, 19, says her work for Karya has helped inform her about tuberculosis symptoms and precautions. Supranav Dash for TIME

But it’s often in the pursuit of new clients that even companies that pride themselves on ethics can end up compromising. What’s to stop Karya doing the same? Part of the answer, Chopra says, lies in Karya’s corporate structure. Karya is registered as a nonprofit in the U.S. that controls two entities in India: one nonprofit and one for-profit. The for-profit is legally bound to donate any profits it makes (after reimbursing workers) to the nonprofit, which reinvests them. The convoluted structure, Chopra says, is because Indian law prevents nonprofits from making any more than 20% of their income from the market as opposed to philanthropic donations. Karya does take grant funding (crucially, it covers the salaries of all 24 of its full-time employees) but not enough to have an entirely nonprofit model be possible. The arrangement, Chopra says, has the benefit of removing any incentive for him or his co-founders to compromise on worker salaries or well-being in return for lucrative contracts.

It’s a model that works for the moment, but could collapse if philanthropic funding dries up. “Karya is very young, and they have got a lot of good traction,” says Subhashree Dutta, a managing partner at The/Nudge Institute, an incubator that has supported Karya with a $20,000 grant. “They have the ability to stay true to their values and still attract capital. But I don’t think they have been significantly exposed to the dilemmas of taking the for-profit or not-for-profit stance.”

Over the course of two days with Karya workers in southern Karnataka, the limitations of Karya’s current system begin to come into focus. Each worker says they have completed 1,287 tasks on the app, which was the maximum number of tasks available on the tuberculosis project at the time of my visit. It equates to about six hours of work. The money workers can receive (just under $50 after bonuses for accuracy) is a welcome boost but won’t last long. On my trip I don’t meet any workers who have received royalties. Chopra tells me that Karya has only just amassed enough resellable data to be attractive to buyers; it has so far distributed $116,000 in royalties to around 4,000 workers, but the ones I’ve met are too early into their work to be among them.

I put to Chopra that it will still take much more to have a meaningful impact on these villagers’ lives. The tuberculosis project is only the beginning for these workers, he replies. They are lined up to shortly begin work on transcription tasks, part of a push by the Indian government to build AI models in several regional languages including Kannada. That, he says, will allow Karya to give “significantly more” work to the villagers in Chilukavadi. Still, the workers are a long way from the $1,500 that would mark their graduation from Karya’s system. Eventually, Chopra acknowledges that not a single one of Karya’s 30,000 workers has reached the $1,500 threshold. Yet their enjoyment of the work, and their desire for more, is clear: when Seshadri, now Karya’s chief technology officer, asks the room full of workers whether they would feel capable of a new task flagging inaccuracies in Kannada sentences, they erupt in excited chatter: a unanimous yes.

The villagers I speak to in Chilukavadi and Alahalli have only a limited understanding of artificial intelligence. Chopra says this can be a challenge when explaining to workers what they’re doing. The most successful approach his team has found is telling workers they are “teaching the computer to speak Kannada,” he says. Nobody here knows of ChatGPT, but villagers do know that Google Assistant (which they refer to as “OK Google”) works better when you prompt it in English than in their mother tongue. Siddaraju L., a 35-year-old unemployed father of three, says he doesn’t know what AI is, but would feel proud if a computer could speak his language. “The same respect I have for my parents, I have for my mother tongue.”

Just as India was able to leapfrog the rest of the world on 4G because it was unencumbered by existing mobile data infrastructure, the hope is that efforts like the ones Karya is enabling will help Indian-language AI projects learn from the mistakes of English AIs and begin from a far more reliable and unbiased starting point. “Until not so long ago, a speech-recognition engine for English would not even understand my English,” says Bali, the speech researcher, referring to her accent. “What is the point of AI technologies being out there if they do not cater to the users they are targeting?”

Write to Billy Perrigo at billy.perrigo@time.com.